!pip install numpy pandas spacy transformers sentence-transformers scikit-learn langchain_community

This chapter will be updated soon.

1 Introduction

This page builds on certain aspects presented in the introductory section. We will advance our understanding of NLP issues through language modeling.

We start from the conclusion reached at the end of the previous chapter: frequentist approaches have several shortcomings. They model language through statistical regularities without accounting for the proximity between words or phrases, and they struggle to incorporate context.

The aim of this chapter is to address the first of those points. This will serve as an introduction to embeddings, the language representations at the core of modern language models used in everyday tools like DeepL or ChatGPT.

1.1 Data Used

We will continue our exploration of literature using the same three English-language authors:

- Edgar Allan Poe (EAP);

- HP Lovecraft (HPL);

- Mary Wollstonecraft Shelley (MWS).

The dataset is available as a CSV file hosted on GitHub and can be downloaded directly from:

https://github.com/GU4243-ADS/spring2018-project1-ginnyqg/raw/master/data/spooky.csv.

To explore the topic of embeddings, we will use a language modeling task: predicting the author of a given text. A language model represents a text or language as a probability distribution over terms (usually words).
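The simplest such distribution is a unigram model. It estimates the probability of each word from that word's relative frequency. Below is a minimal sketch on a hypothetical two-sentence corpus, with deliberately naive tokenization. Fitting one model per author and comparing how likely a new sentence is under each model could serve as a rudimentary author classifier.

```python
from collections import Counter


def unigram_model(texts: list[str]) -> dict[str, float]:
    """Estimate P(word) as each word's relative frequency across the texts."""
    # Lowercasing plus whitespace tokenization: a simplifying assumption
    counts = Counter(word for text in texts for word in text.lower().split())
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}


# Hypothetical two-sentence corpus, not taken from the spooky dataset
corpus = ["the raven and the door", "the door was shut"]
probs = unigram_model(corpus)
print(probs["the"])  # 3 of the 9 words are "the", so about 0.333
```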

This chapter is inspired by several online resources:

1.2 Required Packages

As in the previous section, we need to install specialized NLP libraries along with their dependencies. This tutorial will use several libraries, including some that depend on PyTorch, which is a large framework.

The following note is only relevant for users of SSPCloud.

The standard Python services on SSPCloud (such as vscode-python and jupyter-python) do not include PyTorch by default. This library is quite large (around 600MB) and requires specific configuration to work seamlessly across different software environments. To limit its environmental footprint, this enhanced environment is not provided by default. However, when needed, an environment with PyTorch preinstalled is available.

To access it, simply start a vscode-pytorch or jupyter-pytorch service. If you used one of the buttons above, this pre-configured service was automatically launched for you.

Next, since we will be using the spaCy library with a corpus of English texts, we need to download the English NLP model. For this, you can refer to the official spaCy documentation, which is extremely well designed.

!python -m spacy download en_core_web_sm

2 Data Preparation

We will once again use the spooky dataset:

import pandas as pd
data_url = 'https://github.com/GU4243-ADS/spring2018-project1-ginnyqg/raw/master/data/spooky.csv'
spooky_df = pd.read_csv(data_url)

The dataset pairs each author with a sentence they wrote:

spooky_df.head()

|   | id | text | author |
|---|---|---|---|
| 0 | id26305 | This process, however, afforded me no means of... | EAP |
| 1 | id17569 | It never once occurred to me that the fumbling... | HPL |
| 2 | id11008 | In his left hand was a gold snuff box, from wh... | EAP |
| 3 | id27763 | How lovely is spring As we looked from Windsor... | MWS |
| 4 | id12958 | Finding nothing else, not even gold, the Super... | HPL |

2.1 Preprocessing

As discussed in the previous chapter, the first step in any work with textual data is often preprocessing, which typically includes tokenization and text cleaning.

Here, we will stick to minimal preprocessing: removing punctuation and stop words (for visualization and count-based vectorization methods).

To begin the cleaning process, we will use the en_core_web_sm model from spaCy:

import spacy
nlp = spacy.load('en_core_web_sm')

We will use a spaCy pipe, which automates and parallelizes a number of operations. Pipes in NLP are similar to scikit-learn pipelines or pandas pipes: they are well-suited tools for industrializing various preprocessing tasks.

Preprocessing the text corpus

from typing import List

import spacy

def clean_docs(
    texts: List[str],
    remove_stopwords: bool = False,
    n_process: int = 4,
    remove_punctuation: bool = True
) -> List[str]:
    """
    Cleans a list of text documents by tokenizing, optionally removing stopwords, and optionally removing punctuation.

    Parameters:
        texts (List[str]): List of text documents to clean.
        remove_stopwords (bool): Whether to remove stopwords. Default is False.
        n_process (int): Number of processes to use for processing. Default is 4.
        remove_punctuation (bool): Whether to remove punctuation. Default is True.

    Returns:
        List[str]: List of cleaned text documents.
    """
    # Run the texts through the already-loaded spaCy model (nlp) in parallel,
    # skipping components that this token-level cleaning does not need
    docs = nlp.pipe(
        texts,
        n_process=n_process,
        disable=['parser', 'ner', 'lemmatizer', 'textcat']
    )

    # Pre-load stopwords for faster checking
    stopwords = set(nlp.Defaults.stop_words)

    # Process documents: keep the selected tokens, lowercased, joined by spaces
    docs_cleaned = (
        ' '.join(
            tok.text.lower().strip()
            for tok in doc
            if (not remove_punctuation or not tok.is_punct) and
            (not remove_stopwords or tok.text.lower() not in stopwords)
        )
        for doc in docs
    )

    return list(docs_cleaned)

We apply the clean_docs function to our pandas column. Since pandas.Series objects are iterable, they behave like lists and work very well with our spaCy pipe.

spooky_df['text_clean'] = clean_docs(spooky_df['text'])
spooky_df.head()

|   | id | text | author | text_clean |
|---|---|---|---|---|
| 0 | id26305 | This process, however, afforded me no means of... | EAP | this process however afforded me no means of a... |
| 1 | id17569 | It never once occurred to me that the fumbling... | HPL | it never once occurred to me that the fumbling... |
| 2 | id11008 | In his left hand was a gold snuff box, from wh... | EAP | in his left hand was a gold snuff box from whi... |
| 3 | id27763 | How lovely is spring As we looked from Windsor... | MWS | how lovely is spring as we looked from windsor... |
| 4 | id12958 | Finding nothing else, not even gold, the Super... | HPL | finding nothing else not even gold the superin... |

2.2 Encoding the Target Variable

We perform a simple encoding of the target variable: there are three categories (authors), represented by the integers 0, 1, and 2. For this, we use scikit-learn’s LabelEncoder, introduced earlier in the modeling section. We will use the fit_transform method, which conveniently combines fitting (i.e., creating a mapping between numerical values and labels) and transforming the same column in one step.
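The code for this step is not shown here. Below is a minimal sketch on a small hypothetical DataFrame that stands in for spooky_df. The author_encoded column name is an assumption, chosen to match the metadata displayed later in this chapter:

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

# Hypothetical stand-in for spooky_df: a few rows with the three author labels
df = pd.DataFrame({"author": ["EAP", "HPL", "EAP", "MWS", "HPL"]})

le = LabelEncoder()
# fit_transform learns the label <-> integer mapping and applies it in one step
df["author_encoded"] = le.fit_transform(df["author"])

print(le.classes_)                    # labels, sorted alphabetically
print(df["author_encoded"].tolist())  # one integer per row
# inverse_transform maps integers back to the original labels
print(le.inverse_transform([0, 2]))
```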

We can check the classes of our LabelEncoder:

array(['EAP', 'HPL', 'MWS'], dtype=object)

2.3 Creating the Training and Test Sets

We set aside a test sample (20%) before performing any analysis (even descriptive). This ensures a rigorous evaluation of our models at the end, since these data will never have been seen during training.

Our initial dataset is not balanced—some authors have more texts than others. To ensure fair evaluation of our model, we will stratify the sampling so that the training and test sets contain a similar distribution of authors.

from sklearn.model_selection import train_test_split

y = spooky_df["author"]
X = spooky_df['text_clean']

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

Preview of the first element in X_train:

'this process however afforded me no means of ascertaining the dimensions of my dungeon as i might make its circuit and return to the point whence i set out without being aware of the fact so perfectly uniform seemed the wall'

3 Vectorization Using the Bag of Words Approach

Representing our texts as a bag of words allows us to vectorize the corpus and thus obtain a numerical representation of each text. From there, we can perform various types of modeling tasks.

Let’s define our vector representation using TF-IDF with Scikit:

from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.pipeline import Pipeline

pipeline_tfidf = Pipeline([
    ('tfidf', TfidfVectorizer(max_features=10000)),
])
pipeline_tfidf

Pipeline(steps=[('tfidf', TfidfVectorizer(max_features=10000))])


Let’s go ahead and train our model to vectorize the text using the TF-IDF method. At this stage, we are not yet concerned with evaluation, so we will train on the entire dataset, not just X_train.

pipeline_tfidf.fit(spooky_df['text_clean'])

Pipeline(steps=[('tfidf', TfidfVectorizer(max_features=10000))])

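To show what the fitted vectorizer actually computes, the sketch below reproduces scikit-learn’s default TF-IDF weighting by hand on a hypothetical three-sentence corpus. It relies on the documented defaults that our pipeline keeps: raw counts, smooth_idf=True and norm="l2". max_features only restricts the vocabulary, so it is left out here.

```python
import numpy as np
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

# Hypothetical corpus; the one-letter token "a" is dropped by the default token pattern
docs = ["the raven spoke", "the raven and the door", "a shut door"]

# Raw term counts (same default tokenizer as TfidfVectorizer, so same columns)
tf = CountVectorizer().fit_transform(docs).toarray().astype(float)

# Smoothed IDF (smooth_idf=True): idf(t) = ln((1 + n) / (1 + df(t))) + 1
n_docs = tf.shape[0]
doc_freq = (tf > 0).sum(axis=0)
idf = np.log((1 + n_docs) / (1 + doc_freq)) + 1

# Weight the counts by IDF, then L2-normalize each row (norm="l2")
tfidf_manual = tf * idf
tfidf_manual /= np.linalg.norm(tfidf_manual, axis=1, keepdims=True)

tfidf_sklearn = TfidfVectorizer().fit_transform(docs).toarray()
print(np.allclose(tfidf_manual, tfidf_sklearn))  # True
```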

3.1 Finding the Most Similar Text

First, we can look for the text that is closest—according to TF-IDF similarity—to a given sentence. Let’s take the following example:

text = "He was afraid by Frankenstein monster"

How can we find the text most similar to this one? We need to transform our sentence into the same vector representation, then compare it to the other texts using that same form.

This is essentially an information retrieval task—a classic NLP use case—implemented, for example, by search engines. Since the term “Frankenstein” is quite distinctive, we should be able to identify similarities with other texts written by Mary Shelley using TF-IDF.

A metric commonly used to compare vectors is cosine similarity. This is a central measure in modern NLP. While it is more meaningful with dense vectors (which we’ll explore soon), it still provides a useful exercise for understanding similarity between two vectors, even when those vectors are sparse, as in the bag-of-words approach.

If each dimension of a vector represents a direction, cosine similarity measures the angle between two vectors. The smaller the angle, the closer the vectors.
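Formally, for two vectors u and v:

\[ \cos(u, v) = \frac{u \cdot v}{\lVert u \rVert \, \lVert v \rVert} \]

The small NumPy sketch below uses hand-picked, purely illustrative vectors and checks this formula against scikit-learn’s cosine_similarity:

```python
import numpy as np
from sklearn.metrics.pairwise import cosine_similarity


def cosine(u: np.ndarray, v: np.ndarray) -> float:
    """Cosine of the angle between u and v: dot product over the product of norms."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


u = np.array([1.0, 2.0, 0.0])
v = np.array([2.0, 4.0, 0.0])  # same direction as u, twice as long
w = np.array([0.0, 0.0, 3.0])  # orthogonal to u

print(cosine(u, v))  # approximately 1.0: zero angle, length does not matter
print(cosine(u, w))  # 0.0: the vectors share no non-zero dimension
# scikit-learn expects 2D arrays, one row per vector
print(cosine_similarity(u.reshape(1, -1), v.reshape(1, -1)))
```

TF-IDF weights are never negative, so the similarity between two TF-IDF vectors always lies between 0 and 1.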

3.1.1 With Scikit-Learn

- Use the transform method to vectorize the entire training corpus. Assuming your vectorized training set is named X_train_tfidf, you can convert it to a DataFrame with the following command:

X_train_tfidf = pd.DataFrame(
    X_train_tfidf.todense(), columns=pipeline_tfidf.get_feature_names_out()
)

- Use scikit-learn’s cosine_similarity function to compute the cosine similarity between your vectorized text and the training corpus, using this code:

import numpy as np
from sklearn.metrics.pairwise import cosine_similarity

cosine_similarities = cosine_similarity(
    X_train_tfidf,
    pipeline_tfidf.transform([text])
).flatten()

top_4_indices = np.argsort(cosine_similarities)[-4:][::-1]  # Descending sort
top_4_similarities = cosine_similarities[top_4_indices]

- Retrieve the corresponding documents. Are you satisfied with the result? Do you understand what happened?

At the end of the exercise, the 4 most similar texts are:

|   | text | author | score |
|---|---|---|---|
| 8181 | Listen to me, Frankenstein. | MWS | 0.402964 |
| 8606 | He was gazing at me gaspingly and fascinatedly... | HPL | 0.330177 |
| 14550 | The light is dimmer and the gods are afraid. . . | HPL | 0.314670 |
| 11366 | I screamed aloud that I was not afraid; that I... | HPL | 0.311235 |

3.1.2 With Langchain

This approach to computing text similarity is rather tedious with Scikit. With the rapid development of Python applications leveraging language models, a rich ecosystem has emerged to make these tasks achievable in just a few lines of code.

Among the most valuable tools is Langchain, a high-level Python ecosystem for building production-ready pipelines using textual data.

We will proceed here in two steps:

- Create a retriever, which involves vectorizing our corpus (texts from the three authors) using TF-IDF and storing it in a vector database.

- Vectorize our search query (text, created earlier) on the fly and retrieve its closest matches from the vector database.

Vectorizing our corpus is very straightforward with Langchain, because scikit-learn’s TfidfVectorizer is wrapped in a dedicated module provided by Langchain:

from langchain_community.retrievers import TFIDFRetriever
from langchain_community.document_loaders import DataFrameLoader

loader = DataFrameLoader(spooky_df, page_content_column="text_clean")
retriever = TFIDFRetriever.from_documents(
    loader.load()
)

This retriever object serves as an entry point to our corpus. Langchain is particularly valuable in NLP projects because it provides standardized entry points, allowing you to easily switch out vectorizers without needing to change how the results are used at the end of the pipeline.

The invoke method is used to find the most similar vectors to our search query:

retriever.invoke(text)

[Document(metadata={'id': 'id12587', 'text': 'Listen to me, Frankenstein.', 'author': 'MWS', 'author_encoded': 2}, page_content='listen to me frankenstein'),

Document(metadata={'id': 'id09284', 'text': 'I screamed aloud that I was not afraid; that I never could be afraid; and others screamed with me for solace.', 'author': 'HPL', 'author_encoded': 1}, page_content='i screamed aloud that i was not afraid that i never could be afraid and others screamed with me for solace'),

Document(metadata={'id': 'id09797', 'text': 'It seemed to be a sort of monster, or symbol representing a monster, of a form which only a diseased fancy could conceive.', 'author': 'HPL', 'author_encoded': 1}, page_content='it seemed to be a sort of monster or symbol representing a monster of a form which only a diseased fancy could conceive'),

Document(metadata={'id': 'id10816', 'text': 'And, as I have implied, it was not of the dead man himself that I became afraid.', 'author': 'HPL', 'author_encoded': 1}, page_content='and as i have implied it was not of the dead man himself that i became afraid')]

The output is a Langchain object, which is not convenient for our purposes here. We convert it into a DataFrame:

documents = []
for best_echoes in retriever.invoke(text):
    documents += [{**best_echoes.metadata, **{"text_clean": best_echoes.page_content}}]
documents = pd.DataFrame(documents)

We can add the similarity score column to this DataFrame:

We retrieve the same top document as with scikit-learn, with the same score. The remaining results partly differ:

|   | id | text | author | author_encoded | text_clean | score |
|---|---|---|---|---|---|---|
| 0 | id12587 | Listen to me, Frankenstein. | MWS | 2 | listen to me frankenstein | 0.402964 |
| 1 | id09284 | I screamed aloud that I was not afraid; that I... | HPL | 1 | i screamed aloud that i was not afraid that i ... | 0.311235 |
| 2 | id09797 | It seemed to be a sort of monster, or symbol r... | HPL | 1 | it seemed to be a sort of monster or symbol re... | 0.295587 |
| 3 | id10816 | And, as I have implied, it was not of the dead... | HPL | 1 | and as i have implied it was not of the dead m... | 0.261818 |

BM25 is a probabilistic relevance-based information retrieval model, similar to TF-IDF. It is commonly used in search engines to rank documents relative to a query.

BM25 combines term frequency (TF), inverse document frequency (IDF), and a normalization based on document length. In other words, it improves on TF-IDF by adjusting scores for document length, so that longer documents are not overemphasized.

BM25 performs particularly well in environments where documents vary in length and content. This is why search engines such as Elasticsearch have made it a cornerstone of their search mechanisms.
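One common form of the BM25 score of a document D for a query Q is:

\[ \text{score}(D, Q) = \sum_{q \in Q} \text{IDF}(q) \cdot \frac{f(q, D)\,(k_1 + 1)}{f(q, D) + k_1 \left(1 - b + b \cdot \frac{|D|}{\text{avgdl}}\right)} \]

In this formula:

- f(q, D) is the number of occurrences of q in D.
- |D| is the length of D.
- avgdl is the average document length in the corpus.
- k_1 and b are free parameters.

The sketch below implements the score from scratch with whitespace tokenization. Three choices are assumptions rather than anything specified in this chapter: the common values k1=1.2 and b=0.75, and the IDF variant

\[ \text{IDF}(q) = \ln\left(1 + \frac{N - n(q) + 0.5}{n(q) + 0.5}\right) \]

which stays positive.

```python
import math
from collections import Counter


def bm25_scores(query: str, docs: list[str], k1: float = 1.2, b: float = 0.75) -> list[float]:
    """Okapi BM25 score of each document for `query` (lowercase, whitespace tokens)."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(d) for d in tokenized) / n_docs  # average document length
    # n(q): number of documents containing each term
    doc_freq = Counter(term for d in tokenized for term in set(d))

    scores = []
    for doc in tokenized:
        tf = Counter(doc)
        score = 0.0
        for term in query.lower().split():
            if tf[term] == 0:
                continue  # absent terms contribute nothing
            idf = math.log(1 + (n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5))
            # k1 caps the effect of repeated terms; b scales the length normalization
            denom = tf[term] + k1 * (1 - b + b * len(doc) / avgdl)
            score += idf * tf[term] * (k1 + 1) / denom
        scores.append(score)
    return scores


docs = [
    "the monster",
    "the monster walked along the shore under a pale and silent moon",
    "a quiet evening",
]
print(bm25_scores("monster", docs))
```

Both documents containing “monster” get a positive score, but the short one ranks first: its single occurrence weighs more relative to its length. The third document scores 0.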

Why aren’t all results relevant? We can anticipate several reasons.

The first hypothesis is that we’re training our vectorizer on a biased corpus. While “Frankenstein” is a rare term, it appears more frequently in our dataset than in general English usage. The inverse document frequency is thus biased against the term: its appearance should be a much stronger indicator that the text belongs to Mary Shelley. While addressing this might slightly improve relevance, it’s not the core issue.

The frequentist approach treats all terms as equally distinct from one another: a sentence containing the word “creature” won’t get a higher score when searching for “monster”. Moreover, we have treated our corpus as a bag in which words are independent, so there is no increased likelihood of encountering “Frankenstein” after “doctor”. These limitations point us toward the topic of embeddings. Even though the frequentist method may seem a bit old school, it is not useless and often provides a “tough-to-beat baseline”. In fields like information extraction from short texts, where every term carries a strong signal, this approach is often effective.
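Here is a quick illustration of this point, using plain word counts on three hypothetical documents. A query for “creature” gets zero similarity with the documents about a “monster”, because the two words occupy orthogonal dimensions:

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.metrics.pairwise import cosine_similarity

# Hypothetical documents: the second and third are about a "monster"
docs = ["the creature howled", "the monster howled", "the monster"]
vectorizer = CountVectorizer(stop_words="english")  # drops "the"
X = vectorizer.fit_transform(docs)

query = vectorizer.transform(["creature"])
sims = cosine_similarity(query, X)[0]
print(sims)  # only the document containing the exact word "creature" scores above 0
```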

4 The Word2Vec model, a more compact representation

4.1 Towards a more compact representation of language

The vector representation produced by the bag of words approach is neither compact nor stable, and it is quite crude.

With a small corpus, we will have trouble extrapolating: new texts are very likely to bring new words, which are new feature dimensions absent from the training corpus. This is conceptually a problem, since machine learning algorithms are not designed to make predictions from features they were not trained on¹.

Conversely, the more text we have in a corpus, the larger our vector representation will be. For example, if your bag of words has seen the entire French vocabulary, which is 60,000 words according to the French Academy (estimates being 200,000 for the English language), this results in vectors of considerable size. However, the diversity of texts is, in practice, much lower: common use of French requires around 3,000 words and most texts, especially if they are short, do not use such a complete vocabulary. This therefore implies very sparse vectors, with many 0s.
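The sketch below makes the sparsity concrete. It counts the non-zero entries of a document-term matrix built from four short hypothetical sentences. Even at this tiny scale, most entries are zeros. This is why scikit-learn returns such matrices in a SciPy sparse format rather than as dense arrays.

```python
from sklearn.feature_extraction.text import CountVectorizer

# Hypothetical corpus of short sentences
docs = [
    "the raven tapped at my chamber door",
    "deep into that darkness peering",
    "the stars were dim and the sea was still",
    "i beheld the wretch the miserable monster",
]
X = CountVectorizer().fit_transform(docs)  # SciPy sparse matrix

n_rows, n_cols = X.shape
density = X.nnz / (n_rows * n_cols)  # share of non-zero entries
print(f"{n_cols} dimensions, {density:.0%} of entries are non-zero")
```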

Vectorization according to this approach is therefore inefficient; the signal is poorly compressed. Dense representations, that is, of smaller dimension but all carrying information, seem more adequate to be able to generalize our language modeling.

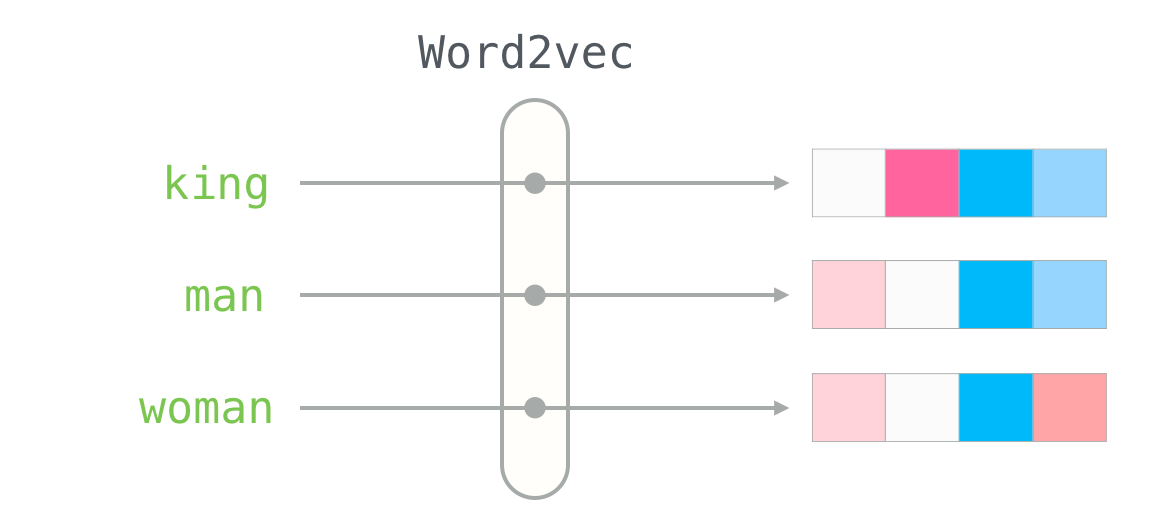

The algorithm that made this approach famous is the Word2Vec model, in some ways the first common ancestor of modern LLMs. Word2Vec’s vector representation is quite compact: its embeddings typically have between 100 and 300 dimensions.

4.2 Semantic relationships between terms

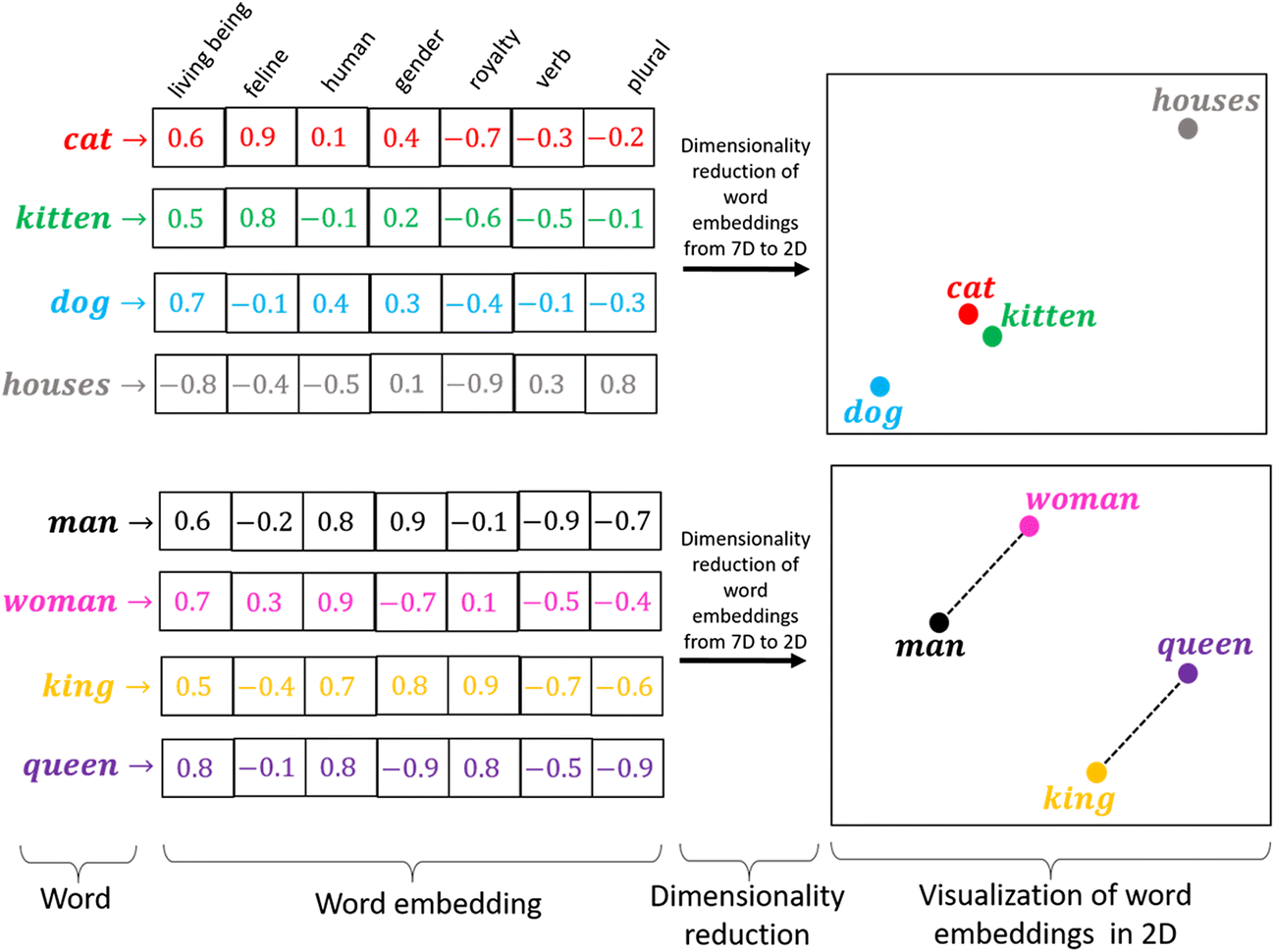

This dense representation addresses a limitation of the bag of words approach that we have mentioned several times. Each dimension represents a latent factor, that is, an unobserved variable, much like the principal components produced by a PCA. These latent dimensions can be interpreted as “fundamental” dimensions of language.

For example, a human knows that a document containing the word “King” and another document containing the word “Queen” are very likely to address similar subjects. A well-trained Word2Vec model will capture that there is a latent factor of the “royalty” type, and the similarity between the vectors associated with the two words will be strong.

The magic goes even further: the model will also capture that there exists a latent factor of type “gender”, and will allow the construction of a semantic space in which arithmetic relationships between vectors make sense. For example,

\[ \text{king} - \text{man} + \text{woman} \approx \text{queen} \]

or, to revisit the example from the original Word2Vec paper (Mikolov 2013),

\[ \text{Paris} - \text{France} + \text{Italy} \approx \text{Rome} \]
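This arithmetic can be illustrated with hand-made two-dimensional vectors, one dimension standing for “royalty” and the other for “gender”. These values are invented for the example, not learned by Word2Vec. The nearest word is found by cosine similarity, excluding the words used in the query, which is a common convention for analogy tasks:

```python
import numpy as np

# Hand-made 2D "embeddings": dimension 0 ~ royalty, dimension 1 ~ gender.
# Purely illustrative values; a trained Word2Vec model learns 100-300 dimensions.
embeddings = {
    "king": np.array([0.9, 0.8]),
    "queen": np.array([0.9, -0.8]),
    "man": np.array([0.1, 0.8]),
    "woman": np.array([0.1, -0.8]),
    "apple": np.array([-0.7, 0.0]),
}


def nearest(vector: np.ndarray, exclude: set[str]) -> str:
    """Word whose embedding has the highest cosine similarity with `vector`."""
    def cos(a: np.ndarray, b: np.ndarray) -> float:
        return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

    candidates = {w: cos(vector, v) for w, v in embeddings.items() if w not in exclude}
    return max(candidates, key=candidates.get)


target = embeddings["king"] - embeddings["man"] + embeddings["woman"]
print(nearest(target, exclude={"king", "man", "woman"}))  # queen
```

With a real pre-trained model, the resulting vector only lands close to the vector for “queen”, hence the ≈ sign in the formulas above.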

Another “miracle” of this approach is that it allows a form of transfer between languages. Since semantic relationships can be similar across languages, many common words can be mapped between languages if they share a common base (such as Western languages). This concept is the foundation of automatic translators and multilingual AI systems.

4.3 How are these models trained?

These models are trained on a prediction task solved by a simple neural network, in a self-supervised way: the targets to predict are drawn from the text itself.

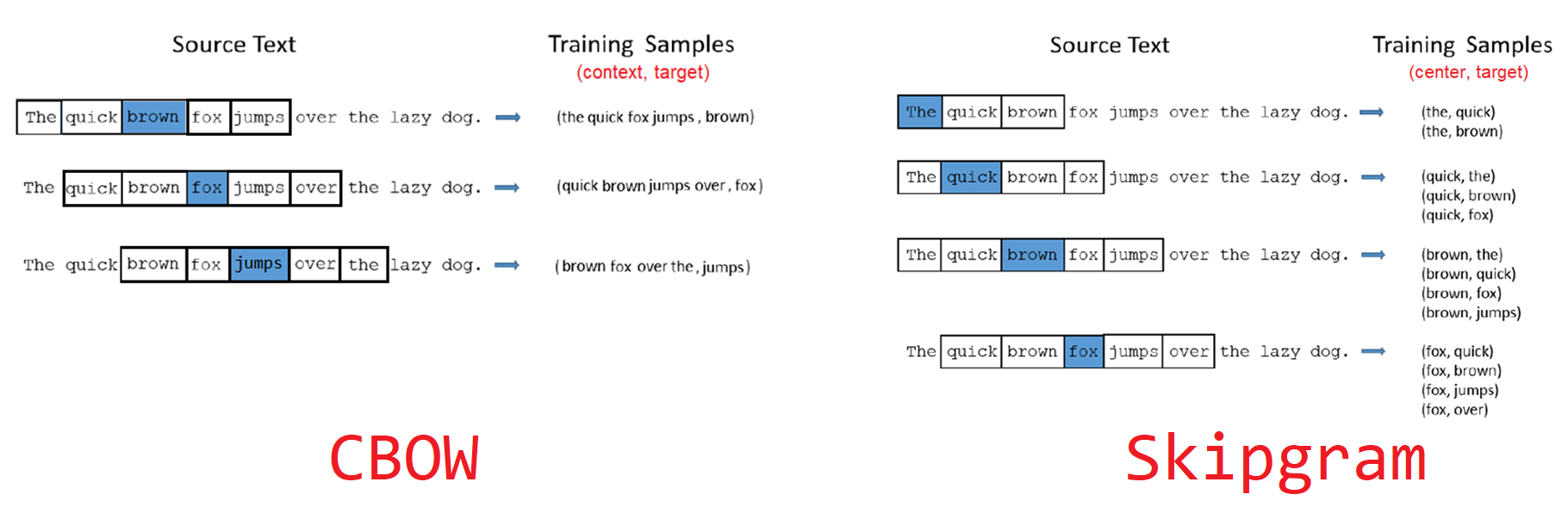

The fundamental idea is that the meaning of a word is understood by looking at words that frequently appear in its neighborhood. For a given word, we will therefore try to predict the words that appear in a window around the target word.

By repeating this task many times on a sufficiently varied corpus, we finally obtain embeddings for each word in the vocabulary, which have the properties discussed previously. The collection of Wikipedia articles is one of the preferred corpora for building lexical embeddings. It contains complete sentences, unlike social media comments, and offers interesting connections between people, places, etc.

The context of a word is defined by a fixed-size window around this word. The window size is a parameter of the embedding construction. The corpus provides a large set of word-context examples, which can be used to train a neural network.

More precisely, there are two approaches, whose details we will not go into:

- Continuous bag of words (CBOW), where the model is trained to predict a word from its context;

- Skip-gram, where the model attempts to predict the context from a single word.
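The sketch below shows how each approach turns raw text into training examples, using a window of size 1 on a hypothetical sentence. It only builds the (input, target) pairs. The neural network that learns the embeddings from them, and the optimizations used in practice, are left out.

```python
def skipgram_pairs(tokens: list[str], window: int = 2) -> list[tuple[str, str]]:
    """(target, context) pairs: skip-gram predicts each context word from the target."""
    pairs = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        pairs.extend((target, tokens[j]) for j in range(lo, hi) if j != i)
    return pairs


def cbow_examples(tokens: list[str], window: int = 2) -> list[tuple[list[str], str]]:
    """(context, target) examples: CBOW predicts the target from its surrounding words."""
    examples = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        examples.append((context, target))
    return examples


tokens = "the monster fled into the night".split()
print(skipgram_pairs(tokens, window=1)[:4])
print(cbow_examples(tokens, window=1)[1])  # (['the', 'fled'], 'monster')
```

In practice, a library such as gensim handles both steps. Its Word2Vec class exposes the window size through the window parameter and switches between CBOW and skip-gram through sg.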

5 Transformers: a richer representation of language

Although the Word2Vec model is trained on contexts, its purpose is to give each word a single vector representation, independent of context. For example, the term “bank” will have exactly the same vector representation whether it appears in the sentence “She walked along the river bank” or “He’s waiting for you in front of the bank”. This is a major limitation of this type of approach, given how much the interpretation of language depends on context.

The objective of transformer architectures is to enable contextual vector representations. In other words, a word will have several vector representations, depending on the context in which it occurs. These models rely on the attention mechanism (Vaswani 2017). Before this approach, recurrent models read a text word by word. On reaching the nth word, the only memory they kept was a state carried over from the previous word. Through this recurrence, they did keep a memory of earlier words, but it tended to fade. Consequently, for a word appearing late in a sentence, the context from the beginning of the sentence was likely to be forgotten. For instance, in the sentence “at the river, he was going to explore the bank”, upon reaching the word “bank”, the model had very likely forgotten the beginning of the sentence, which was nevertheless important for interpretation.

The objective of the attention mechanism is to create an internal memory in the model that lets it keep track, for any word in a text, of the other words. Not all of them are relevant for interpreting the text, but this avoids forgetting those that are important. The main innovation of recent years in NLP has been to scale attention mechanisms up without making the models intractable. The context windows of the best-performing models are becoming immense. For example, the Llama 3.1 model (made public by Meta in July 2024) offers a context window of 128,000 tokens, or about 96,000 words, the equivalent of Tolkien’s Hobbit. In other words, to grasp the subtleties of a word’s meaning, this model can browse through a context as long as a novel of about 300 pages.

The two models that marked their era in the field are BERT, developed in 2018 by Google (which was already behind Word2Vec), and the first version of OpenAI’s well-known GPT, which, in 2018, was the first pre-trained model based on the transformer architecture. These two transformer families differ in how they integrate context to make a prediction. GPT is an autoregressive model and therefore only considers the tokens before the one to predict. BERT uses the tokens on both the left and the right to infer context. Both are pre-trained in a self-supervised way: GPT on next-token prediction and BERT on masked-token prediction (“The Hugging Face Course, 2022” 2022). OpenAI’s GPT models from version 3 onwards, including those behind ChatGPT, have not been released as open source, so using them requires going through OpenAI’s APIs. There are nevertheless many alternatives whose weights are open, if not open source², which allow using these LLMs through Python, notably through the transformers library developed by Hugging Face.
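At its core, the attention mechanism (Vaswani 2017) computes, for each token, a weighted average of the token vectors:

\[ \text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^\top}{\sqrt{d}}\right) V \]

The NumPy sketch below implements this single-head version. Its causal flag masks future tokens, which is how an autoregressive model like GPT restricts itself to the left context. BERT-style models attend in both directions instead. The learned projections, multiple heads and other layers of a real transformer are deliberately omitted.

```python
import numpy as np


def attention(Q: np.ndarray, K: np.ndarray, V: np.ndarray,
              causal: bool = False) -> tuple[np.ndarray, np.ndarray]:
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d)) V.

    Q, K and V have shape (n_tokens, d). With causal=True, token i only attends
    to tokens 0..i (GPT-style); otherwise it attends to the whole sequence (BERT-style).
    Returns the new token representations and the attention weights.
    """
    d = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d)  # (n_tokens, n_tokens) pairwise affinities
    if causal:
        # Hide future tokens: strict upper triangle set to -inf before the softmax
        future = np.triu(np.ones(scores.shape, dtype=bool), k=1)
        scores = np.where(future, -np.inf, scores)
    # Row-wise softmax, shifted by each row's max for numerical stability
    weights = np.exp(scores - scores.max(axis=-1, keepdims=True))
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ V, weights


# Hypothetical input: 4 tokens with 8-dimensional vectors. A real transformer would
# first project X into Q, K and V with learned weight matrices; we skip that here.
rng = np.random.default_rng(0)
X = rng.normal(size=(4, 8))
out, w = attention(X, X, X, causal=True)
print(np.round(w, 2))  # lower-triangular: no weight on tokens to the right
```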

When working with small-sized corpora, it’s generally a bad idea to train your own model from scratch. Fortunately, models pre-trained on very large corpora are available. They allow for transfer learning, that is, to benefit from the performance of a model that has been trained on another task or on another corpus.

- Redo a train/test split on 500 randomly drawn rows.

- Import the all-MiniLM-L6-v2 model with the sentence-transformers package. Encode X_train and X_test.

- Perform a classification using a simple method, such as an SVM, based on the embeddings produced in the previous question. As the training set is small, you can perform cross-validation.

- Understand why the performance is worse than that of a naive Bayes classifier.

Answer to question 1:

random_rows = spooky_df.sample(500)
y = random_rows["author"]
X = random_rows['text']

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

Answer to question 2:

from sentence_transformers import SentenceTransformer
from sklearn.svm import LinearSVC

model = SentenceTransformer(
    "all-MiniLM-L6-v2", model_kwargs={"torch_dtype": "float16"}
)

X_train_vectors = model.encode(X_train.values)
X_test_vectors = model.encode(X_test.values)

Answer to question 3:

import numpy as np
from sklearn.model_selection import cross_val_score

clf = LinearSVC(max_iter=10000, C=0.1, dual="auto")

# 4-fold cross-validation on the small training set
scores = cross_val_score(
    clf, X_train_vectors, y_train,
    cv=4, scoring='f1_micro', n_jobs=4
)

print(f"CV scores {scores}")
print(f"Mean F1 {np.mean(scores)}")

But why, with a very complicated method, can’t we beat a very simple one?

There are several possible reasons:

- TF-IDF is a simple model, but it still performs very well (this is known as a “tough-to-beat baseline”).

- Author classification is a very specific and arduous task, which does not do justice to embeddings. As we said earlier, embeddings are particularly relevant when it comes to semantic similarity between texts (clustering, etc.).

In the case of our classification task, certain words (character names, place names) are likely sufficient to classify texts relevantly. This is not captured by the embeddings, which give all words the same importance.

Additional information

This site was built automatically through a GitHub action using the Quarto

The environment used to obtain the results is reproducible via uv. The pyproject.toml file used to build this environment is available on the linogaliana/python-datascientist repository

pyproject.toml

[project]
name = "python-datascientist"
version = "0.1.0"
description = "Source code for Lino Galiana's Python for data science course"
readme = "README.md"
requires-python = ">=3.13,<3.14"
dependencies = [
    "altair>=6.0.0",
    "black==24.8.0",
    "cartiflette",
    "contextily==1.6.2",
    "duckdb>=0.10.1",
    "folium>=0.19.6",
    "gdal!=3.11.1",
    "geoplot==0.5.1",
    "graphviz==0.20.3",
    "great-tables>=0.12.0",
    "gt-extras>=0.0.8",
    "ipykernel>=6.29.5",
    "jupyter>=1.1.1",
    "jupyter-cache==1.0.0",
    "kaleido==0.2.1",
    "langchain-community>=0.3.27",
    "loguru==0.7.3",
    "markdown>=3.8",
    "nbclient==0.10.0",
    "nbformat==5.10.4",
    "nltk>=3.9.1",
    "pip>=25.1.1",
    "plotly>=6.1.2",
    "plotnine>=0.15",
    "polars==1.8.2",
    "pyarrow>=17.0.0",
    "pynsee==0.1.8",
    "python-dotenv==1.0.1",
    "python-frontmatter>=1.1.0",
    "pywaffle==1.1.1",
    "requests>=2.32.3",
    "scikit-image==0.24.0",
    "scipy>=1.13.0",
    "selenium<4.39.0",
    "spacy>=3.8.4",
    "webdriver-manager==4.0.2",
    "wordcloud==1.9.3",
]

[tool.uv.sources]
cartiflette = { git = "https://github.com/inseefrlab/cartiflette" }
gdal = [
    { index = "gdal-wheels", marker = "sys_platform == 'linux'" },
    { index = "geospatial_wheels", marker = "sys_platform == 'win32'" },
]

[[tool.uv.index]]
name = "geospatial_wheels"
url = "https://nathanjmcdougall.github.io/geospatial-wheels-index/"
explicit = true

[[tool.uv.index]]
name = "gdal-wheels"
url = "https://gitlab.com/api/v4/projects/61637378/packages/pypi/simple"
explicit = true

[dependency-groups]
dev = [
    "nb-clean>=4.0.1",
]

To use exactly the same environment (version of Python and packages), please refer to the documentation for uv.

| SHA | Date | Author | Description |
|---|---|---|---|
| 794ce14a | 2025-09-15 16:21:42 | lgaliana | retouche quelques abstracts |
| ca020f2a | 2025-08-22 09:25:48 | Lino Galiana | énième try/except pour l’API d’exemple de l’APE |
| 99ab48b0 | 2025-07-25 18:50:15 | Lino Galiana | Utilisation des callout classiques pour les box notes and co (#629) |
| 94648290 | 2025-07-22 18:57:48 | Lino Galiana | Fix boxes now that it is better supported by jupyter (#628) |
| 91431fa2 | 2025-06-09 17:08:00 | Lino Galiana | Improve homepage hero banner (#612) |
| 5f615403 | 2025-05-26 12:50:46 | lgaliana | Title and description for embedding chapter (english version) |
| d6b67125 | 2025-05-23 18:03:48 | Lino Galiana | Traduction des chapitres NLP (#603) |
| 24d4ff6d | 2024-12-09 15:22:19 | lgaliana | ensure non executable block |
| 3817cdc5 | 2024-12-09 13:34:56 | lgaliana | eval false pour le dernier exo |
| 8d097424 | 2024-12-09 13:34:16 | lgaliana | update embedding |
| 441da890 | 2024-12-08 20:28:21 | lgaliana | Utilise un service pytorch |
| 0ec1e15c | 2024-12-07 15:54:20 | lgaliana | Commence à décrire l’attention |
| 35443b75 | 2024-12-07 13:40:34 | lgaliana | Word2Vec |
| 89397cf2 | 2024-12-06 21:50:25 | lgaliana | Preprocessing |
| 4181dab1 | 2024-12-06 13:16:36 | lgaliana | Transition |
| 38b5152a | 2024-12-05 22:21:11 | lgaliana | détails sur l’approche proba |
| 5df69ccf | 2024-12-05 17:56:47 | lgaliana | up |
| 1b7188a1 | 2024-12-05 13:21:11 | lgaliana | Embedding chapter |
| c641de05 | 2024-08-22 11:37:13 | Lino Galiana | A series of fix for notebooks that were bugging (#545) |
| c5a9fb7a | 2024-07-22 09:56:18 | Julien PRAMIL | Fix bug in LDA chapter (#525) |
| c9f9f8a7 | 2024-04-24 15:09:35 | Lino Galiana | Dark mode and CSS improvements (#494) |
| 06d003a1 | 2024-04-23 10:09:22 | Lino Galiana | Continue la restructuration des sous-parties (#492) |
| 005d89b8 | 2023-12-20 17:23:04 | Lino Galiana | Finalise l’affichage des statistiques Git (#478) |
| 3437373a | 2023-12-16 20:11:06 | Lino Galiana | Améliore l’exercice sur le LASSO (#473) |
| 4cd44f35 | 2023-12-11 17:37:50 | Antoine Palazzolo | Relecture NLP (#474) |
| deaafb6f | 2023-12-11 13:44:34 | Thomas Faria | Relecture Thomas partie NLP (#472) |
| 1f23de28 | 2023-12-01 17:25:36 | Lino Galiana | Stockage des images sur S3 (#466) |
| 6855667d | 2023-11-29 10:21:01 | Romain Avouac | Corrections tp vectorisation + improve badge creation (#465) |
| 69cf52bd | 2023-11-21 16:12:37 | Antoine Palazzolo | [On-going] Suggestions chapitres modélisation (#452) |
| 652009df | 2023-10-09 13:56:34 | Lino Galiana | Finalise le cleaning (#430) |
| a7711832 | 2023-10-09 11:27:45 | Antoine Palazzolo | Relecture TD2 par Antoine (#418) |
| 154f09e4 | 2023-09-26 14:59:11 | Antoine Palazzolo | Des typos corrigées par Antoine (#411) |
| 9a4e2267 | 2023-08-28 17:11:52 | Lino Galiana | Action to check URL still exist (#399) |
| 3bdf3b06 | 2023-08-25 11:23:02 | Lino Galiana | Simplification de la structure 🤓 (#393) |
| 78ea2cbd | 2023-07-20 20:27:31 | Lino Galiana | Change titles levels (#381) |
| 29ff3f58 | 2023-07-07 14:17:53 | linogaliana | description everywhere |
| f21a24d3 | 2023-07-02 10:58:15 | Lino Galiana | Pipeline Quarto & Pages 🚀 (#365) |
| b3959852 | 2023-02-13 17:29:36 | Lino Galiana | Retire shortcode spoiler (#352) |
| f10815b5 | 2022-08-25 16:00:03 | Lino Galiana | Notebooks should now look more beautiful (#260) |
| d201e3cd | 2022-08-03 15:50:34 | Lino Galiana | Pimp la homepage ✨ (#249) |
| 12965bac | 2022-05-25 15:53:27 | Lino Galiana | :launch: Bascule vers quarto (#226) |
| 9c71d6e7 | 2022-03-08 10:34:26 | Lino Galiana | Plus d’éléments sur S3 (#218) |
| 70587527 | 2022-03-04 15:35:17 | Lino Galiana | Relecture Word2Vec (#216) |
| ce1f2b55 | 2022-02-16 13:54:27 | Lino Galiana | spacy corpus pre-downloaded |
| 66e2837c | 2021-12-24 16:54:45 | Lino Galiana | Fix a few typos in the new pipeline tutorial (#208) |
| 8ab1956a | 2021-12-23 21:07:30 | Romain Avouac | TP vectorization prediction authors (#206) |
| 09b60a18 | 2021-12-21 19:58:58 | Lino Galiana | Relecture suite du NLP (#205) |
| 495599d7 | 2021-12-19 18:33:05 | Lino Galiana | Des éléments supplémentaires dans la partie NLP (#202) |
| 2a8809fb | 2021-10-27 12:05:34 | Lino Galiana | Simplification des hooks pour gagner en flexibilité et clarté (#166) |
| 2e4d5862 | 2021-09-02 12:03:39 | Lino Galiana | Simplify badges generation (#130) |
| 4cdb759c | 2021-05-12 10:37:23 | Lino Galiana | :sparkles: :star2: Nouveau thème hugo :snake: :fire: (#105) |
| 7f9f97bc | 2021-04-30 21:44:04 | Lino Galiana | 🐳 + 🐍 New workflow (docker 🐳) and new dataset for modelization (2020 🇺🇸 elections) (#99) |
| d164635d | 2020-12-08 16:22:00 | Lino Galiana | :books: Première partie NLP (#87) |

References

Footnotes

This remark may seem surprising, given the important place generative AI now holds in our everyday use. Nevertheless, keep in mind that although you ask AIs new questions, you ask them in terms they know: natural language in a language present in their training corpus, digital images that a machine can therefore interpret, etc. In other words, your prompt is not, in itself, unknown to the AI, which can interpret it even if its content is new and original.↩︎

Some organizations, like Meta for Llama, make the trained weights of their models available on the Hugging Face platform, allowing these models to be reused if the license permits. Nevertheless, these are not open source models. Three things are not shared: the code used to train the models, the training corpora (built from massive web scraping), and any additional annotations used to create specialized versions.↩︎

Citation

@book{galiana2025,
  author = {Galiana, Lino},
  title = {Python Pour La Data Science},
  date = {2025},
  url = {https://pythonds.linogaliana.fr/},
  doi = {10.5281/zenodo.8229676},
  langid = {en}
}