!pip install geopandas openpyxl plotnine plotly

The whole machine learning part uses the same dataset, presented in the introduction to this part: US presidential election voting data combined with sociodemographic variables. The code is available on GitHub.

import requests

url = 'https://raw.githubusercontent.com/linogaliana/python-datascientist/main/content/modelisation/get_data.py'

r = requests.get(url, allow_redirects=True)

open('getdata.py', 'wb').write(r.content)

import getdata

votes = getdata.create_votes_dataframes()

Until now, we have assumed that the variables useful for predicting the Republican vote were known to the modeler. We have therefore used only a limited subset of the variables available in our data. Yet, beyond avoiding the computational burden of building a model with a large number of variables, choosing a small number of variables (a parsimonious model) limits the risk of overfitting.

How, then, should we choose the right number of variables and the best combination of them? Several methods exist, including:

- relying on statistical performance criteria that penalize non-parsimonious models, for example the BIC;

- using backward elimination techniques;

- building models whose objective function penalizes the lack of parsimony (the approach we follow here).
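To make the second approach concrete, here is a minimal sketch of backward elimination. It assumes scikit-learn's `SequentialFeatureSelector` and synthetic data from `make_regression`; neither is used elsewhere in this chapter, and the number of features to keep (3) is an arbitrary choice for illustration.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.feature_selection import SequentialFeatureSelector
from sklearn.linear_model import LinearRegression

# Synthetic data (assumption): 8 candidate features, only 3 of them informative
X, y = make_regression(
    n_samples=200, n_features=8, n_informative=3, noise=5.0, random_state=0
)

# Backward elimination: start from all features and remove them one at a time,
# dropping at each step the feature whose removal hurts the CV score the least
selector = SequentialFeatureSelector(
    LinearRegression(),
    n_features_to_select=3,
    direction="backward",
    cv=5,
)
selector.fit(X, y)

selected = np.flatnonzero(selector.get_support())
print("Selected feature indices:", selected)
```

Unlike the LASSO, this procedure refits the model many times, which becomes costly when the number of candidate variables is large.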

In this chapter, we present the main issues in variable selection through the LASSO.

We will use the following functions and packages:

import numpy as np

from sklearn.svm import LinearSVC

from sklearn.feature_selection import SelectFromModel

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

from sklearn.impute import SimpleImputer

from sklearn.linear_model import Lasso

import sklearn.metrics

from sklearn.linear_model import LinearRegression

import matplotlib.cm as cm

import matplotlib.pyplot as plt

from sklearn.linear_model import lasso_path

import seaborn as sns

1 Principle of the LASSO

1.1 General principle

The class of feature selection models is very broad and covers a very diverse set of models. We focus on the LASSO (Least Absolute Shrinkage and Selection Operator), an extension of linear regression designed to select sparse models. This type of model is central in the field of compressed sensing (where the term L1-regularization is used rather than LASSO). The LASSO is a special case of elastic-net regression, another well-known special case of which is ridge regression. Unlike classical linear regression, these methods also work when \(p>N\), that is, when the number of regressors is very large and exceeds the number of observations.

1.2 Penalization

By adopting a penalized objective function, the LASSO sets a number of coefficients exactly to 0. The variables whose coefficient is non-zero thus pass the selection test.

The LASSO is a constrained optimization program. We look for the estimator \(\beta\) that minimizes the quadratic error (as in linear regression) under an additional constraint that regularizes the parameters: \[ \min_{\beta} \frac{1}{2}\mathbb{E}\bigg( \big( X\beta - y \big)^2 \bigg) \\ \text{s.t. } \sum_{j=1}^p |\beta_j| \leq t \]

This program can be reformulated using the Lagrangian, which gives a more tractable minimization program:

\[ \beta^{\text{LASSO}} = \arg \min_{\beta} \frac{1}{2}\mathbb{E}\bigg( \big( X\beta - y \big)^2 \bigg) + \alpha \sum_{j=1}^p |\beta_j| = \arg \min_{\beta} ||y-X\beta||_{2}^{2} + \lambda ||\beta||_1 \]

where \(\lambda\) is a rewriting of the previous regularization parameter that depends on \(\alpha\). The strength of the penalty applied to non-parsimonious models depends on this parameter.
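To see why the L1 penalty produces exact zeros, the sketch below looks at the special case of a design matrix with orthonormal columns, where the LASSO solution is a soft-thresholding of the OLS coefficients. The data are simulated, and the names `soft_threshold` and `beta_true` are introduced only for this illustration. The threshold `n * alpha` follows from the objective minimized by scikit-learn's `Lasso`, namely \(\frac{1}{2n}||y-X\beta||_2^2 + \alpha ||\beta||_1\).

```python
import numpy as np
from sklearn.linear_model import Lasso

rng = np.random.default_rng(0)
n, p = 100, 5

# Design matrix with orthonormal columns (Q.T @ Q = I), via a QR decomposition
Q, _ = np.linalg.qr(rng.normal(size=(n, p)))
beta_true = np.array([3.0, -2.0, 0.5, 0.0, 0.0])
y = Q @ beta_true + 0.1 * rng.normal(size=n)

def soft_threshold(z, threshold):
    """Shrink z towards 0 and set it to exactly 0 when |z| <= threshold."""
    return np.sign(z) * np.maximum(np.abs(z) - threshold, 0.0)

alpha = 0.01
beta_ols = Q.T @ y  # OLS solution when the columns are orthonormal

# scikit-learn minimizes (1 / (2 n)) * ||y - X b||^2 + alpha * ||b||_1,
# which corresponds to a threshold of n * alpha in this orthonormal setting
beta_closed_form = soft_threshold(beta_ols, n * alpha)

lasso = Lasso(alpha=alpha, fit_intercept=False).fit(Q, y)

print("OLS          :", np.round(beta_ols, 3))
print("Closed form  :", np.round(beta_closed_form, 3))
print("sklearn Lasso:", np.round(lasso.coef_, 3))
```

Large coefficients are shrunk by the threshold, while coefficients smaller than the threshold in absolute value are set exactly to zero. With correlated regressors there is no such closed form, but the same mechanism is at work.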

1.3 First LASSO regression

Since we are looking for the best predictors of the Republican vote, we remove the variables from which it can be derived directly: the competitors' scores!

import pandas as pd

df2 = pd.DataFrame(votes.drop(columns='geometry'))

df2 = df2.loc[

:,

~df2.columns.str.endswith(

('_democrat','_green','_other', 'winner', 'per_point_diff', 'per_dem')

)

]

df2 = df2.loc[:,~df2.columns.duplicated()]

In the next exercise, we will use the following function to draw a correlation matrix that looks nicer than the default one provided by Pandas.


import numpy as np
import pandas as pd
import plotly.express as px

def plot_corr_heatmap(
    df: pd.DataFrame,
    drop_cols=None,
    column_labels: dict | None = None,
    decimals: int = 2,
    width: int = 600,
    height: int = 600,
    show_xlabels: bool = False
):
    """
    Plot a correlation heatmap (lower triangle) from a DataFrame.

    Parameters
    ----------
    df : pd.DataFrame
        Input DataFrame.
    drop_cols : list or None
        Columns to drop before computing the correlation
        (e.g. ['winner']).
    column_labels : dict or None
        Dictionary used to rename the columns.
    decimals : int
        Number of decimals used for rounding before corr().
    width, height : int
        Dimensions of the Plotly figure.
    show_xlabels : bool
        Whether to display the tick labels (applied to both axes).
    """
    data = df.copy()

    # 1. Columns to drop
    if drop_cols is not None:
        data = data.drop(columns=drop_cols)

    # 2. Optional renaming, then rounding
    if column_labels is not None:
        data = data.rename(columns=column_labels)
    data = data.round(decimals)

    # 3. Correlation matrix
    corr = data.corr()

    # 4. Mask the upper triangle (diagonal included)
    mask = np.triu(np.ones_like(corr, dtype=bool))
    corr_masked = corr.mask(mask)

    # 5. Plotly heatmap
    fig = px.imshow(
        corr_masked.values,
        x=corr.columns,
        y=corr.columns,
        color_continuous_scale='RdBu_r',  # reversed scale
        zmin=-1,
        zmax=1,
        text_auto=".2f"
    )

    # 6. Custom hover
    fig.update_traces(
        hovertemplate="Var 1: %{y}<br>Var 2: %{x}<br>Corr: %{z:.2f}<extra></extra>"
    )

    # 7. Layout
    fig.update_layout(
        coloraxis_showscale=False,
        xaxis=dict(
            showticklabels=show_xlabels,
            title=None,
            ticks=''
        ),
        yaxis=dict(
            showticklabels=show_xlabels,
            title=None,
            ticks=''
        ),
        plot_bgcolor="rgba(0,0,0,0)",
        margin=dict(t=10, b=10, l=10, r=10),
        width=width,
        height=height
    )

    return fig

In this exercise, we will also use a function to extract the variables selected by the LASSO. Here it is:

Function to retrieve the variables that passed the selection step

from sklearn.linear_model import Lasso
from sklearn.pipeline import Pipeline

def extract_features_selected(lasso: Pipeline, preprocessing_step_name: str = 'preprocess') -> pd.Series:
    """
    Extracts selected features based on the coefficients obtained from Lasso regression.

    Parameters:
    - lasso (Pipeline): The scikit-learn pipeline containing a trained Lasso regression model.
    - preprocessing_step_name (str): The name of the preprocessing step in the pipeline. Default is 'preprocess'.

    Returns:
    - pd.Series: A Pandas Series containing selected features with non-zero coefficients.
    """
    # Check that the provided object is a scikit-learn pipeline
    if not isinstance(lasso, Pipeline):
        raise ValueError("The provided lasso object is not a scikit-learn pipeline.")

    # Extract the final step (the estimator) from the pipeline
    lasso_model = lasso[-1]

    # Check that the final step is a Lasso regression model
    if not isinstance(lasso_model, Lasso):
        raise ValueError("The final step of the pipeline is not a Lasso regression model.")

    # Check that the Lasso model has been fitted (it then exposes 'coef_')
    if not hasattr(lasso_model, 'coef_'):
        raise ValueError("The provided Lasso regression model does not have 'coef_' attribute. "
                         "Make sure it is a trained Lasso regression model.")

    # Get feature names from the preprocessing step
    features_preprocessing = lasso[preprocessing_step_name].get_feature_names_out()

    # Extract selected features based on non-zero coefficients
    features_selec = pd.Series(features_preprocessing[np.abs(lasso_model.coef_) > 0])

    return features_selec

We are still trying to predict the variable per_gop. Before running our estimation, we will create some intermediate objects that will be used to define our pipeline:

1. In our DataFrame, replace infinite values with NaN.

2. Create a training sample and a test sample.

We can now move on to the core of our pipeline definition. This example can serve as a source of inspiration, as can this one.

3. First create the preprocessing steps for our model. It is customary to separate the steps applied to continuous numerical variables from those applied to the other variables, called categorical.

    - For numerical variables, impute with the mean and then standardize;

    - For categorical variables, linear regression techniques require a one hot encoding expansion. Before this one hot encoding, impute with the most frequent value.

4. Finalize the pipeline by adding the estimation step, then fit a LASSO model penalized with \(\alpha = 0.1\).

Assuming that your pipeline is stored in an object named pipeline and that its last step is named model, you can access this step directly with pipeline['model'].

5. Display the coefficient values. Which variables have a non-zero value?

6. Show that the selected variables are sometimes highly correlated.

7. Compare the performance of this parsimonious model with that of a model with more variables.

Hint for question 1

# Replace infinite values with NaN

df2.replace([np.inf, -np.inf], np.nan, inplace=True)

Hint for question 3

The definition of a pipeline follows this structure:

numeric_pipeline = Pipeline(steps=[
    ('impute', ...),  # define the imputation method here
    ('scale', ...)    # define the standardization method here
])

categorical_pipeline = ...  # adapt the template above

# It is up to you to define numerical_features and categorical_features beforehand
preprocessor = ColumnTransformer(transformers=[
    ('number', numeric_pipeline, numerical_features),
    ('category', categorical_pipeline, categorical_features)
])

The preprocessing pipeline (question 3) takes the following form:

ColumnTransformer(transformers=[('number',

Pipeline(steps=[('impute', SimpleImputer()),

('scale', StandardScaler())]),

['ALAND', 'AWATER', 'votes_gop', 'votes_dem',

'total_votes', 'diff', 'FIPS_y',

'Rural_Urban_Continuum_Code_2013',

'Rural_Urban_Continuum_Code_2023',

'Urban_Influence_2013',

'Economic_typology_2015', 'CENSUS_2020_POP',

'ESTIMATES_BASE_2020', 'POP_EST...

'DEATHS_2020', 'DEATHS_2021', 'DEATHS_2022',

'DEATHS_2023', 'NATURAL_CHG_2020', ...]),

('category',

Pipeline(steps=[('impute',

SimpleImputer(strategy='most_frequent')),

('one-hot',

OneHotEncoder(handle_unknown='ignore',

sparse_output=False))]),

['STATEFP', 'COUNTYFP', 'COUNTYNS', 'AFFGEOID',

'GEOID', 'NAME', 'LSAD', 'FIPS_x',

'state_name', 'county_fips', 'county_name',

'State', 'Area_Name', 'FIPS'])])


Once finalized (question 4), the pipeline takes the following form:

Pipeline(steps=[('preprocess',

ColumnTransformer(transformers=[('number',

Pipeline(steps=[('impute',

SimpleImputer()),

('scale',

StandardScaler())]),

['ALAND', 'AWATER',

'votes_gop', 'votes_dem',

'total_votes', 'diff',

'FIPS_y',

'Rural_Urban_Continuum_Code_2013',

'Rural_Urban_Continuum_Code_2023',

'Urban_Influence_2013',

'Economic_typology_2015',

'CENSUS_2020_POP',...

'NATURAL_CHG_2020', ...]),

('category',

Pipeline(steps=[('impute',

SimpleImputer(strategy='most_frequent')),

('one-hot',

OneHotEncoder(handle_unknown='ignore',

sparse_output=False))]),

['STATEFP', 'COUNTYFP',

'COUNTYNS', 'AFFGEOID',

'GEOID', 'NAME', 'LSAD',

'FIPS_x', 'state_name',

'county_fips', 'county_name',

'State', 'Area_Name',

'FIPS'])])),

('model', Lasso(alpha=0.1))])


The model is fairly parsimonious, since only a subset of our initial variables is selected (all the more so as our categorical variables were expanded into many variables by the one hot encoding).

After question 5, the selected variables are:

| | selected |
|---|---|
| 0 | ALAND |
| 1 | FIPS_y |
| 2 | Rural_Urban_Continuum_Code_2023 |
| 3 | N_POP_CHG_2020 |
| 4 | INTERNATIONAL_MIG_2023 |
| 5 | DOMESTIC_MIG_2023 |
| 6 | RESIDUAL_2020 |
| 7 | RESIDUAL_2021 |
| 8 | 2023 Rural-urban Continuum Code |
| 9 | Percent of adults with a bachelor's degree or ... |
| 10 | Percent of adults with a high school diploma o... |
| 11 | Percent of adults with a bachelor's degree or ... |
| 12 | Percent of adults with less than a high school... |
| 13 | Percent of adults with a bachelor's degree or ... |
| 14 | Metro_2013 |
| 15 | Unemployment_rate_2000 |
| 16 | Unemployment_rate_2002 |
| 17 | Unemployment_rate_2003 |
| 18 | Unemployment_rate_2012 |
| 19 | Unemployment_rate_2014 |
| 20 | Rural-urban_Continuum_Code_2003 |
| 21 | Rural-urban_Continuum_Code_2013 |
| 22 | CI90LB017P_2021 |
| 23 | CI90LB517P_2021 |
| 24 | candidatevotes_2016_republican |
| 25 | share_2012_republican |
| 26 | share_2016_republican |

Some variables make sense, such as the education variables. In particular, one of the best predictors of the Republican score in 2020 is... the Republican score (and mechanically the Democratic score) in 2016 and 2012.

In addition, redundant variables are selected. A more thorough data cleaning phase would in fact be necessary.

The parsimonious model is only slightly less accurate than the non-parsimonious one (higher RMSE, lower R2), while using roughly a tenth of the parameters:

| | parsimonious | non-parsimonious |
|---|---|---|
| RMSE | 2.699583 | 2.491548 |
| R2 | 0.972809 | 0.976838 |
| Number of parameters | 27 | 256 |
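A comparison table of this kind can be built along the following lines. This is a sketch on simulated data, not the code that produced the figures above: the helper `evaluate` and the choice of `alpha=1.0` are assumptions made for the illustration.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso, LinearRegression
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.model_selection import train_test_split

# Synthetic data (assumption): 50 features, only 5 of them informative
X, y = make_regression(
    n_samples=300, n_features=50, n_informative=5, noise=10.0, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

def evaluate(model):
    """Return test RMSE, test R2 and the number of non-zero coefficients."""
    y_pred = model.predict(X_test)
    return {
        "RMSE": np.sqrt(mean_squared_error(y_test, y_pred)),
        "R2": r2_score(y_test, y_pred),
        "Number of parameters": int(np.sum(model.coef_ != 0)),
    }

sparse_model = Lasso(alpha=1.0).fit(X_train, y_train)
full_model = LinearRegression().fit(X_train, y_train)

comparison = pd.DataFrame({
    "parsimonious": evaluate(sparse_model),
    "non-parsimonious": evaluate(full_model),
})
print(comparison)
```

Both models are evaluated on the same test sample, so that the difference in RMSE and R2 reflects the models rather than the split.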

Incidentally, regressing the 2020 score on the 2016 score alone already yields very good explanatory performance, which suggests that the vote behaves like an autoregressive process:

import statsmodels.api as sm

import statsmodels.formula.api as smf

smf.ols("per_gop ~ share_2016_republican", data = df2).fit().summary()

| Dep. Variable: | per_gop | R-squared: | 0.968 |
|---|---|---|---|
| Model: | OLS | Adj. R-squared: | 0.968 |
| Method: | Least Squares | F-statistic: | 9.292e+04 |
| Date: | Sun, 11 Jan 2026 | Prob (F-statistic): | 0.00 |
| Time: | 21:13:29 | Log-Likelihood: | 6603.5 |
| No. Observations: | 3107 | AIC: | -1.320e+04 |
| Df Residuals: | 3105 | BIC: | -1.319e+04 |
| Df Model: | 1 | | |
| Covariance Type: | nonrobust | | |

| | coef | std err | t | P>\|t\| | [0.025 | 0.975] |
|---|---|---|---|---|---|---|
| Intercept | 0.0109 | 0.002 | 5.056 | 0.000 | 0.007 | 0.015 |
| share_2016_republican | 1.0101 | 0.003 | 304.835 | 0.000 | 1.004 | 1.017 |

| Omnibus: | 2045.232 | Durbin-Watson: | 1.982 |
|---|---|---|---|
| Prob(Omnibus): | 0.000 | Jarque-Bera (JB): | 51553.266 |
| Skew: | 2.731 | Prob(JB): | 0.00 |
| Kurtosis: | 22.193 | Cond. No. | 9.00 |

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

2 Role of the penalty \(\alpha\) in variable selection

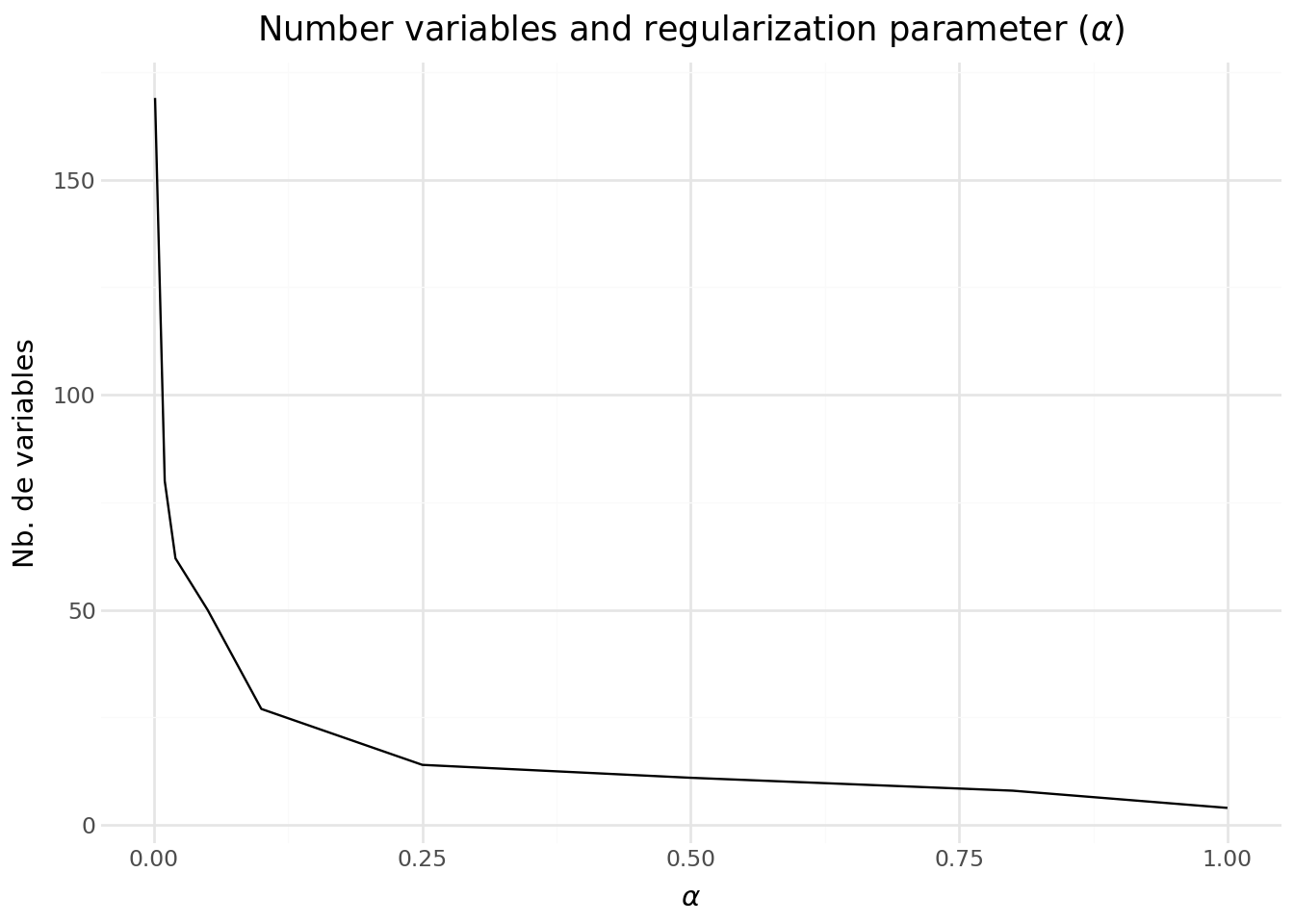

So far, we have taken the hyperparameter \(\alpha\) as given. What role does it play in the conclusions of our modeling? To answer this, we can explore the effect of its value on the number of variables that pass the selection step.

For the next exercise, we consider only the quantitative variables in order to speed up computations. Indeed, with non-parsimonious models, the many categories of our categorical variables make the optimization problem hard to solve in a reasonable time.

from sklearn.impute import SimpleImputer

from sklearn.preprocessing import StandardScaler

df2.replace([np.inf, -np.inf], np.nan, inplace=True)

X_train, X_test, y_train, y_test = train_test_split(

df2.drop(["per_gop"], axis = 1),

100*df2['per_gop'], test_size=0.2, random_state=0

)

numerical_features = X_train.select_dtypes(include='number').columns.tolist()

categorical_features = X_train.select_dtypes(exclude='number').columns.tolist()

numeric_pipeline = Pipeline(steps=[

('impute', SimpleImputer(strategy='mean')),

('scale', StandardScaler())

])

preprocessed_features = pd.DataFrame(

numeric_pipeline.fit_transform(

X_train.drop(columns = categorical_features)

)

)

numeric_pipeline

Use the lasso_path function to evaluate the number of parameters selected by the LASSO as \(\alpha\) varies (go through \(\alpha \in \{0.001,0.01,0.02,0.025,0.05,0.1,0.25,0.5,0.8,1.0\}\)).

The relationship you should obtain between \(\alpha\) and the number of selected parameters is decreasing: the higher \(\alpha\) is, the fewer variables the model selects.
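As an illustration of the exercise above, here is a minimal sketch of how lasso_path can be used to count the selected variables for each penalty. It runs on synthetic data generated with make_regression (an assumption made so that the example is self-contained); in the tutorial, `preprocessed_features` and `y_train` would take the place of `X` and `y`.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import lasso_path
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the preprocessed election data (assumption):
# 30 standardized features, of which only 5 are truly informative.
X, y = make_regression(
    n_samples=200, n_features=30, n_informative=5, noise=10.0, random_state=0
)
X = StandardScaler().fit_transform(X)
y = y - y.mean()  # lasso_path does not fit an intercept, so center the target

my_alphas = np.array([0.001, 0.01, 0.02, 0.025, 0.05, 0.1, 0.25, 0.5, 0.8, 1.0])

# lasso_path returns the alphas it actually used together with one column of
# coefficients per alpha, so counts are paired with alphas_path, not my_alphas.
alphas_path, coefs_path, _ = lasso_path(X, y, alphas=my_alphas)

# A variable is "selected" for a given alpha when its coefficient is non-zero
n_selected = (coefs_path != 0).sum(axis=0)

for alpha, n in zip(alphas_path, n_selected):
    print(f"alpha = {alpha:.3f}: {n} variables selected")
```

Plotting `n_selected` against `alphas_path` gives the kind of decreasing curve described above, although the exact counts depend on the data.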

3 Cross-validation to select the model

Which \(\alpha\) should be preferred? To decide, we run a cross-validation and choose the model for which the variables that pass the selection step best predict the Republican result.
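To make the principle concrete, the following sketch performs the cross-validation by hand on synthetic data: for each candidate \(\alpha\), a Lasso is evaluated on 5 folds and the penalty with the lowest average out-of-sample error is retained. The dataset, the grid and the variable names are assumptions chosen for illustration; the LassoCV estimator used in this section automates the same loop.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso
from sklearn.model_selection import KFold, cross_val_score

# Synthetic data standing in for the tutorial's features and target (assumption)
X, y = make_regression(
    n_samples=300, n_features=20, n_informative=5, noise=15.0, random_state=0
)

candidate_alphas = [0.001, 0.01, 0.1, 1.0, 10.0]  # illustrative grid
cv = KFold(n_splits=5, shuffle=True, random_state=0)

mean_mse = {}
for alpha in candidate_alphas:
    # scikit-learn maximizes scores, hence the negated mean squared error
    scores = cross_val_score(
        Lasso(alpha=alpha, max_iter=10_000),
        X, y, cv=cv, scoring="neg_mean_squared_error",
    )
    mean_mse[alpha] = -scores.mean()

# The retained penalty is the one with the lowest average validation error
best_alpha = min(mean_mse, key=mean_mse.get)
print("alpha with the lowest cross-validated MSE:", best_alpha)
```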

from sklearn.linear_model import LassoCV

my_alphas = np.array([0.001,0.01,0.02,0.025,0.05,0.1,0.25,0.5,0.8,1.0])

# 5-fold cross-validation over the grid of candidate penalties
lcv = (
    LassoCV(
        alphas=my_alphas,
        fit_intercept=False,
        random_state=0,
        cv=5
    ).fit(
        preprocessed_features, y_train
    )
)

The “best” \(\alpha\) can then be retrieved:

print("alpha optimal :", lcv.alpha_)

alpha optimal : 1.0

This value can be used to fit a new pipeline:

from sklearn.compose import ColumnTransformer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Numeric variables: mean imputation, then standardization
numeric_pipeline = Pipeline(steps=[
    ('impute', SimpleImputer(strategy='mean')),
    ('scale', StandardScaler())
])

# Categorical variables: most frequent value imputation, then one-hot encoding
categorical_pipeline = Pipeline(steps=[
    ('impute', SimpleImputer(strategy='most_frequent')),
    ('one-hot', OneHotEncoder(handle_unknown='ignore', sparse_output=False))
])

preprocessor = ColumnTransformer(transformers=[
    ('number', numeric_pipeline, numerical_features),
    ('category', categorical_pipeline, categorical_features)
])

# LASSO with the penalty selected by cross-validation
model = Lasso(
    fit_intercept=False,
    alpha = lcv.alpha_
)

lasso_pipeline = Pipeline(steps=[
    ('preprocess', preprocessor),
    ('model', model)
])

lasso_optimal = lasso_pipeline.fit(X_train,y_train)

# extract_features_selected is the helper defined earlier in this chapter
features_selec2 = extract_features_selected(lasso_optimal)

The selected variables are:

Selected features are:

| | selected |
|---|---|
| 0 | R_BIRTH_2021 |
| 1 | R_DEATH_2023 |
| 2 | Percent of adults completing some college or a... |
| 3 | Percent of adults with a bachelor's degree or ... |
| 4 | Percent of adults with a bachelor's degree or ... |
| 5 | Percent of adults with a high school diploma o... |
| 6 | CI90LBINC_2021 |
| 7 | candidatevotes_2016_republican |
| 8 | share_2008_republican |
| 9 | share_2012_republican |
| 10 | share_2016_republican |
| 11 | STATEFP_22 |
| 12 | LSAD_06 |
| 13 | LSAD_15 |
| 14 | state_name_Louisiana |

This corresponds to a model with 15 selected variables.

If the model appears insufficiently parsimonious, the definition of the relevant variables should be revisited to check whether a different scale would be more appropriate for some of them (for example, a log transformation).
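As a hedged illustration of such a rescaling, the sketch below adds a log step to a numeric pipeline through FunctionTransformer. The skewed variable is simulated and the step names are hypothetical; whether a log scale suits a given variable of the election data remains to be checked case by case.

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import FunctionTransformer, StandardScaler

rng = np.random.default_rng(0)
# Hypothetical right-skewed, strictly positive variable (income-like column)
X_skewed = rng.lognormal(mean=10.0, sigma=1.0, size=(500, 1))

# Same structure as a numeric pipeline, with a log step before scaling.
# np.log1p computes log(1 + x), so it requires values greater than -1.
log_pipeline = Pipeline(steps=[
    ("impute", SimpleImputer(strategy="mean")),
    ("log", FunctionTransformer(np.log1p)),
    ("scale", StandardScaler()),
])
X_log = log_pipeline.fit_transform(X_skewed)


def skewness(values):
    """Sample skewness: mean of the cubed standardized values."""
    values = np.asarray(values).ravel()
    return float(np.mean(((values - values.mean()) / values.std()) ** 3))


print("skewness before the log step:", round(skewness(X_skewed), 2))
print("skewness after the log step :", round(skewness(X_log), 2))
```

A distribution closer to symmetric after the transformation suggests the log scale is worth testing in the LASSO pipeline, with cross-validation deciding whether it actually improves the predictions.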

Additional information

This site was built automatically through a Github action using the reproducible publishing software Quarto

The environment used to obtain the results is reproducible via uv. The pyproject.toml file used to build this environment is available on the linogaliana/python-datascientist repository


To use exactly the same environment (Python version and packages), refer to the uv documentation.


Citation

@book{galiana2025,

author = {Galiana, Lino},

title = {Python pour la data science},

date = {2025},

url = {https://pythonds.linogaliana.fr/},

doi = {10.5281/zenodo.8229676},

langid = {fr}

}